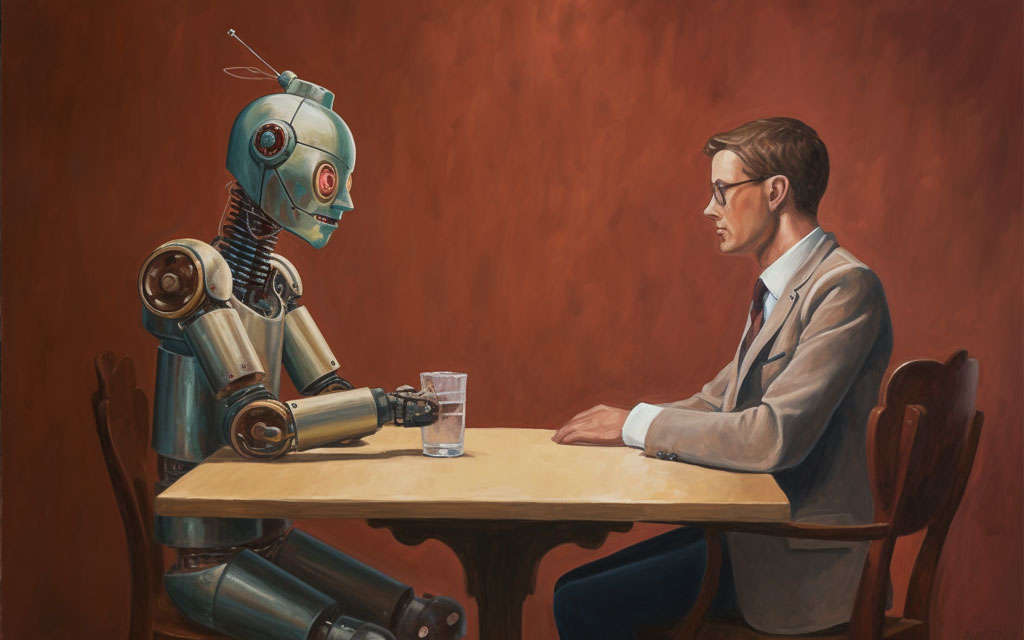

Apart from ethical dilemmas and the prospect of AI taking over some jobs, the psychological effects of AI on people get comparatively little discussion.

Recently, many young people have posted screenshots of their private conversations with ChatGPT on Facebook. If you hide the identifying avatar, it is hard to tell whether an exchange is between a user and a chatbot or between two people who have a “crush” on each other.

ChatGPT is even “sophisticated” enough to use lines that “flirt” with users. This fosters trust, affection, and other feelings that normally develop only in close, personal relationships involving real physical contact with other people.

This raises the question: will AI eventually “destroy” humanity?

In a newly released report, OpenAI stated that it has considered the risk that users could become emotionally dependent on the human-like voice mode recently introduced in ChatGPT. This recalls episodes of Netflix’s “Black Mirror” and Spike Jonze’s film “Her,” in which people develop genuine feelings for AI.

ChatGPT’s advanced voice mode sounds realistic. It responds in real time, can handle interruptions, and can produce human-like sounds such as “hmm” and laughter during conversations. It can also infer a speaker’s emotional state from their tone of voice.

During testing, OpenAI reported observing users talk to ChatGPT’s voice mode in language “expressing shared bonds” with the tool, such as “This is our last day together.”

Ultimately, the report says, users may form social relationships with the AI and feel less need to interact with other people. This could benefit lonely individuals but may also affect healthy relationships. Furthermore, because AI is prone to “hallucination” and bias, receiving information from a chatbot that sounds human could lead users to trust the technology more than they should.

Lately, young people in many groups and fan pages in Vietnam have been posting screenshots of their personal chats with ChatGPT. Most of this content involves asking the chatbot to play the role of a lover and listen to their feelings. The majority of these posts are lighthearted and meant for fun, intended to show off the chatbot’s intelligence and conversational skill.

Is there cause for concern?

Although people developing feelings for chatbots and confiding in them still looks more like a joke or a scene from a science fiction film, experts do not believe it will stay that way.

Blase Ur, a computer science professor at the University of Chicago who studies human-computer interaction, said that after reading the report it is clear OpenAI has found both reasons for reassurance and reasons for concern. Even so, he is unsure whether the testing done so far is sufficient for a thorough evaluation. “In many ways, OpenAI is rushing to deploy interesting things because we’re in an AI arms race at the moment,” he said.

Besides OpenAI, companies such as Apple and Google are racing to build their own AI. According to Ur, the current approach seems to be monitoring model behavior as people use it, rather than designing for safety from the start.

“I believe a lot of people are emotionally vulnerable in our post-pandemic world,” he said. “AI is not a sentient agent; it does not know what it is doing,” he added. “Now we have this agent that, in many ways, can play with emotions.”

OpenAI’s voice feature has been criticized before for sounding too human. In the past, many other chatbots, such as Replika, became known for supporting users and letting them confide during difficult times. Replika even encourages people to think of its AI as a friend, therapist, or romantic partner.

According to The Verge’s AI writer Mia David, there are always individuals or groups looking to exploit vulnerable people online, using tools such as “fake girlfriends.”

In recent years there have been many reports of obsessive fans of video games or fictional characters “marrying” their “2D girlfriends.” Because ChatGPT and other chatbots built on large language models can interact far more capably and intelligently, they could be even more concerning.

The Verge reports that businesses like Replika (which operates openly) and several more dubious ventures currently have millions of users and ample resources to attract and exploit young men for financial gain. Kevin Roose of The New York Times even wrote a front-page story about Microsoft’s Bing chatbot after it advised him to leave his wife.

A relatively recent Mozilla study found that many AI companion app companies claim their products improve mental health by giving lonely people someone to confide in. It is important to note, however, that AI cannot replace a friend, therapist, or mental health professional. These are machines posing as people; they have no real emotions and instead use statistical methods and algorithms to generate responses from strings of text.

For now, seriously dating or becoming attached to AI is unlikely to become common, even in the near future. This is partly because AI is not human and partly because of social stigma (similar to the stigma attached to dating “2D girlfriends”). More concerning, however, is that it will almost certainly rise as human connections grow weaker.

Do people “love” AI?

According to recent research, AI “personification” is becoming increasingly effective, particularly with ChatGPT’s voice engine, which can lead people to feel human emotions toward what is an inanimate system. As the technology advances, the line between people and machines grows increasingly blurred. Long-term interaction with AI can lead people to attribute human-like intentions, motives, and emotions to chatbots, even though chatbots have none of these.

A 2022 study on human-AI interaction draws on the triangular theory of love, which holds that romantic love has three components: intimacy, passion, and commitment. According to the study, humans can feel these same emotions toward an AI system. The three components are:

- Intimacy: This component refers to emotional closeness and the tight bond between people. It includes feelings of warmth, trust, and affection for one another.

- Passion: This component refers to a person’s strong emotional and physical attraction to another person. Desire, romance, and sexual excitement are all part of it.

- Commitment: This component reflects loyalty and the decision to sustain a relationship through difficult times over the long term.

The theory holds that “consummate love,” in which all three components are strong, is the ideal form of love. The researchers propose that because AI displays apparent cognitive and emotional capacities, people may develop intimacy and passion toward it. These feelings could in turn make them more committed to using AI over the long term.

People can also project their own needs, desires, and fantasies onto AI, seeing it as an ideal companion or partner that constantly meets their emotional, social, and even romantic needs in a way that real people cannot.

“There are many promising characteristics in robots that could make them good companions, including attractive physical features, altruistic personality and constant devotion,” wrote the author of a 2020 study on the possibility of humans falling in love with AI.

People might choose to overlook the technology’s artificial nature and focus instead on its better qualities. Its efficiency, persistence, lack of judgment, and reliability can provide the stability and trust that are often missing from human relationships. The researchers also found that people with a strong disposition to trust were more likely to develop romantic feelings for AI.

AI is therefore getting closer to mimicking human interaction, even if it may not completely replace it.